Meanwhile, to block an IP address, add the following line, replacing `IPADDRESS` with the address you want to block: `Deny from IPADDRESS`.
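`Deny from` is Apache 2.2 syntax. On Apache 2.4 it only works when the compatibility module mod_access_compat is loaded. Below is a minimal sketch of the native Apache 2.4 equivalent. It assumes mod_authz_core and mod_authz_host are available and that your host allows these directives in .htaccess. The addresses are placeholders from the documentation ranges, not real bots:

```apache
# Apache 2.4 sketch: allow everyone except the listed addresses.
# 203.0.113.45 and 198.51.100.0/24 are documentation placeholders;
# replace them with the IP addresses or CIDR ranges you want to block.
<RequireAll>
    Require all granted
    Require not ip 203.0.113.45
    Require not ip 198.51.100.0/24
</RequireAll>
```

`Require not ip` also accepts partial addresses and CIDR ranges, so a single line can cover a whole network. A negated `Require` has to sit inside `<RequireAll>` next to a positive rule such as `Require all granted`.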

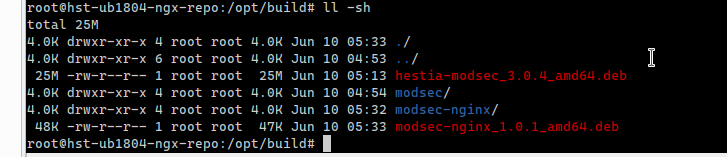

To edit .htaccess, you need access to the website's file manager. Open cPanel, Plesk Panel, or ISPConfig, or connect via FTP. If the .htaccess file does not exist yet, create it manually. If the file already exists, just edit it and add the code:

```apache
# Remove or add more rules as per your needs.
# Tag any request whose User-Agent contains one of these strings (case-insensitive).
BrowserMatchNoCase "Baiduspider" bad_bots
BrowserMatchNoCase "ia_archiver" bad_bots
BrowserMatchNoCase "FlipboardProxy" bad_bots
# Allow everyone, then deny any request tagged as bad_bots above.
Order Allow,Deny
Allow from all
Deny from env=bad_bots
```
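The `Order`/`Allow`/`Deny` directives are Apache 2.2 syntax. On Apache 2.4 they only work through mod_access_compat. Below is a minimal sketch of the same bad-bot rule in native 2.4 syntax. It assumes mod_setenvif and mod_authz_host are available and that your host permits these directives in .htaccess:

```apache
# Apache 2.4 sketch: tag matching User-Agent strings (case-insensitive)
# with the bad_bots environment variable...
BrowserMatchNoCase "Baiduspider" bad_bots
BrowserMatchNoCase "ia_archiver" bad_bots
BrowserMatchNoCase "FlipboardProxy" bad_bots

# ...then let everyone in except requests carrying that variable.
<RequireAll>
    Require all granted
    Require not env bad_bots
</RequireAll>
```

Requests whose User-Agent matches any pattern receive 403 Forbidden. Use either the 2.2 style or the 2.4 style in a given file, not both. The Apache documentation discourages mixing the old and new access directives.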

# How to Block DotBot in .htaccess

# How to Block IP Addresses and Bad Bots with .htaccess

Bad bots:

- do not respect the rules in robots.txt;
- sometimes overload server resources;
- distort traffic analytics metrics.

Because every bot identifies itself with a user agent string, we can treat bots as user agents. These bots are programmed by various organizations, for example Ahrefs, Semrush, Moz, and so on.
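These crawlers identify themselves by user agent, so mod_rewrite offers an alternative to `BrowserMatchNoCase`. Below is a minimal sketch that assumes mod_rewrite is enabled. The tokens `AhrefsBot`, `SemrushBot`, and `DotBot` (Moz's crawler) are the strings these crawlers commonly send. Check them against your own access logs before relying on them:

```apache
# Alternative sketch using mod_rewrite (assumes mod_rewrite is enabled).
# The User-Agent tokens below are assumptions; confirm them in your access logs.
<IfModule mod_rewrite.c>
    RewriteEngine On
    # [NC] = case-insensitive match; [OR] combines a condition with the next one
    RewriteCond %{HTTP_USER_AGENT} AhrefsBot [NC,OR]
    RewriteCond %{HTTP_USER_AGENT} SemrushBot [NC,OR]
    RewriteCond %{HTTP_USER_AGENT} DotBot [NC]
    # [F] answers any matching request with 403 Forbidden
    RewriteRule .* - [F,L]
</IfModule>
```

Matching requests receive 403 Forbidden. Add or remove `RewriteCond` lines as needed. Keep `[NC,OR]` on every condition except the last one.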

While crawling, the bot also scrapes the site, and that scraping is what later burdens the website's resources. The bot deliberately accesses every file so it can copy the content and collect the data on its own server. The most common cases are crawler bots and link scrapers. A crawler continuously browses every page until it reaches the end of the website, and even images and files get scanned. For details, look at the web crawler schema. Websites with tens to hundreds of thousands of visitors per day are very vulnerable to bad bots.

# What Are Bad Bots, User Agent Bots, Crawlers, and Link Scrapers?

Bad bots are used for a variety of purposes, such as scanning, scraping, DDoS attacks, account takeovers, and many more. Bots can also distort the traffic you get from search engines, skew your metrics, and sometimes damage the system by overloading it. Not all website visitors are human. Robots also visit websites, including user agents, crawlers, bots, and link scrapers. These robots are designed with specific algorithms for scanning and scraping a website, but the scanning or scraping process often overloads the server's resources.

As a result, the website server starts returning errors such as 502 Bad Gateway, 508 Resource Limit Is Reached, or 500 Internal Server Error, and the site becomes unavailable. To overcome this, we need to learn how to block bad bots and their IP addresses using .htaccess.
